Welcome to MUCalc

The Leverhulme Research Centre for Forensic Science Measurement Uncertainty Calculator (MUCalc) is an application for calculating measurement uncertainty in accordance with the standards of International Organization for Standardization ISO/IEC 17025.

This version (v3.1.0) of the software computes uncertainty components for Homogeneity, Method Precision, Calibration Standard, Sample Volume and Calibration Curve with the Calibration Curve assumed to be linear. If data is uploaded for all components, the Combined Uncertainty is computed using all components. An uncertainty component can be excluded from the Combined Uncertainty by simply not uploading any data for that component.

Once data is uploaded, a step-by-step computation and details of all formulas used can be accessed by clicking on the respective uncertainty component tab displayed on the left-hand side of the screen. Each uncertainty component has three main tabs: Overview, Method and Step by Step Calculations. Together these give more detailed information about the approach used.

Additionally, a Homogeneity Test tab details the calculation for testing whether there is a statistically significant difference between group means of samples using a one-way analysis of variance (ANOVA).

Case Sample Data

Specify below the number of replicates and mean concentration for the case sample.

Coverage Factor \((k)\)

A coverage factor can be automatically calculated by specifying a Confidence Level below, or overridden by a manually specified Coverage Factor.

The specified Confidence Level percentage probability will be used to read the appropriate Coverage Factor from a t-distribution table, whereas a manually specified Coverage Factor will take precedence over the specified Confidence Level and be used explicitly for the Expanded Uncertainty calculation.

Replicates \((r_s)\)

Mean Concentration \((x_s)\)

Results

Download Report

For reporting purposes, a report can be downloaded and stored for all the results uploaded.

Download Report

Uncertainty of Homogeneity

Overview

Homogeneity/heterogeneity, as defined in the EURACHEM/CITAC guide (2019) and IUPAC (1990), is:

"The degree to which a property or constituent is uniformly distributed throughout a quantity of material.

Note:

- A material may be homogeneous with respect to one analyte or property but heterogeneous with respect to another.

- The degree of heterogeneity (the opposite of homogeneity) is the determining factor of sampling error."

The uncertainty of homogeneity (2001) quantifies the uncertainty associated with the between-group homogeneity where differences among sample groups are of interest. Detailed step-by-step calculations are displayed here with the main formulas used to compute the uncertainty of homogeneity shown in the Method tab.

To find out whether your samples are homogeneous or heterogeneous, the Homogeneity Test tab displays a detailed calculation for testing whether there is a statistically significant difference between group means of samples using a one-way analysis of variance (ANOVA), on the assumption that samples are normally distributed, have equal variance and are independent. For more information see Veen et al: Principles of analysis of variance (2000) and Veen et al: Homogeneity study (2001).

Method

The Relative Standard Uncertainty of homogeneity is given by the following calculation:

$$u_r(\text{Homogeneity}) = \frac{ \text{Standard Uncertainty} }{ \text{Grand Mean} } = \frac{ u(\text{Homogeneity}) }{ \overline{X}_T } $$

where \(\displaystyle u(\text{Homogeneity}) = \text{max}\{u_a,u_b\}\),

\(\displaystyle u_a(\text{Homogeneity}) = \sqrt{\frac{ MSS_B - MSS_W }{ n_0 }}\) and \(\displaystyle u_b(\text{Homogeneity}) = \sqrt{ \frac{ MSS_W }{ n_0 } } \times \sqrt{ \frac{ 2 }{ k(n_0-1) } }\)

A one-way analysis of variance (ANOVA) test is carried out to calculate the Mean Sum of Squares Between groups (\(MSS_B\)) and the Mean Sum of Squares Within groups (\(MSS_W\)) given by:

\(\displaystyle MSS_B = \frac{ \sum\limits_{j=1}^k n_j(\overline{X}_{j}-\overline{X}_T)^2 } { k-1 } \) and \(\displaystyle MSS_W = \frac{ \sum\limits_{j=1}^k\sum\limits_{i=1}^{n_j} (X_{ij}-\overline{X}_j)^2 } { N-k }\)

- \(k\) is the number of groups.

- \(n_j\) is the number of measurements/replicates in the group \(j\) where \(j=1\ldots k\).

- \(\displaystyle n_0 = \frac{1}{k-1} \left[\sum\limits_{j=1}^k n_j - \frac{ \sum\limits_{j=1}^k n_j^2 } { \sum\limits_{j=1}^k n_j }\right] = \frac{1}{k-1} \left[ N - \frac{A}{N} \right] \)

Where all \(n_j\)'s are the same (i.e. \(n_1=n_2=\ldots=n_k=n\)) then this simplifies to \(n_0 = n\).

- \(N\) is the total number of measurements, i.e. \(N = \sum\limits_{j=1}^k n_j\).

- \(A\) is the sum of the squared numbers of measurements/replicates across the groups, i.e. \(A = \sum\limits_{j=1}^k n^2_j\).

- \(X_{ij}\) is the \(i^{th}\) measurement of the \(j^{th}\) group.

- \(\overline{X}_j\) is the mean of the measurements in group \(j\).

- \(\overline{X}_T\) is the grand mean, calculated as the sum of all measurements \(\left(\sum\limits_{j=1}^k\sum\limits_{i=1}^{n_j} X_{ij}\right)\) divided by the number of measurements \((N)\).
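The formulas above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the application's own code: the function name `homogeneity_uncertainty` and the returned dictionary keys are hypothetical, and the sketch assumes that \(u_a\) is taken as zero when \(MSS_B < MSS_W\) (where the square root would otherwise be undefined).

```python
import math


def homogeneity_uncertainty(groups):
    """Return MSS_B, MSS_W, n_0, u and u_r for a list of groups.

    `groups` is a list of lists; each inner list holds the replicate
    measurements X_ij of one group j.
    """
    k = len(groups)                       # number of groups
    sizes = [len(g) for g in groups]      # n_j for each group
    N = sum(sizes)                        # total number of measurements
    grand_mean = sum(sum(g) for g in groups) / N
    group_means = [sum(g) / len(g) for g in groups]

    # Sums of squares between and within groups
    ss_between = sum(n * (m - grand_mean) ** 2
                     for n, m in zip(sizes, group_means))
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    mss_b = ss_between / (k - 1)
    mss_w = ss_within / (N - k)

    # n_0 = (N - A/N) / (k - 1), with A the sum of squared group sizes
    A = sum(n ** 2 for n in sizes)
    n0 = (N - A / N) / (k - 1)

    # Assumption: u_a is set to 0 when MSS_B < MSS_W
    u_a = math.sqrt(max(mss_b - mss_w, 0.0) / n0)
    u_b = math.sqrt(mss_w / n0) * math.sqrt(2.0 / (k * (n0 - 1)))
    u = max(u_a, u_b)

    return {"mss_b": mss_b, "mss_w": mss_w, "n0": n0,
            "u": u, "u_r": u / grand_mean}
```

With equal group sizes the computed `n0` equals the common group size, matching the simplification \(n_0 = n\) noted above.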

Calculations for Sum of Squares Between

Parameters

Grand Mean

Mean Sum of Squares Between (\(MSS_B\))

Calculations for Sum of Squares Within

The values in the table below are calculated using \((X_{ij}-\overline{X}_j)^2\), which are summed to give the Sum of Squares Within.

Mean Sum of Squares Within (\(MSS_W\))

Standard Uncertainty (\(u\))

Relative Standard Uncertainty (\(u_r\))

Homogeneity Test

Overview

A one-way analysis of variance (ANOVA) is used to test the null hypothesis \((H_0)\) of equality of means among sample groups against the alternative hypothesis \((H_1)\) that at least two of the group means differ, on the assumption that samples are normally distributed, have equal variance and are independent. For \(k\) independent groups with means \(m_1 \ldots m_k\), the hypotheses of interest are given by:

\(H_0\): \(m_1 = m_2 = m_3= \ldots = m_k\)

\(H_1\): \(m_l \neq m_j\) for some \(l,j\)

The test statistic \((F_{\large s})\) is given by the ratio of the mean sum of squares between \((MSS_B)\) to the mean sum of squares within \((MSS_W)\), as shown in the Method tab, and follows an F-distribution under \(H_0\).

\(H_0\) is rejected if the F statistic \((F_{\large s})\) is greater than the F critical (\(F_c\)) value for a given alpha level, in which case it is concluded that at least two group means differ. For more information see Veen et al: Principles of analysis of variance (2000) and Veen et al: Homogeneity study (2001).

An alpha value \((\alpha)\) of 0.05 (relating to a confidence level \((CL_H)\) of 95%) is used by default; however, this can be changed below.

Confidence Level \((CL_H\%)\)

Method

A one-way analysis of variance (ANOVA) test is carried out where the Mean Sum of Squares Between (\(MSS_B\)) is defined as:

$$MSS_B = \frac{ \sum\limits_{j=1}^k n_j(\overline{X}_{j}-\overline{X}_T)^2 } { k-1 },$$

the Mean Sum of Squares Within (\(MSS_W\)) is defined as:

$$MSS_W = \frac{ \sum\limits_{j=1}^k\sum\limits_{i=1}^{n_j} (X_{ij}-\overline{X}_j)^2 } { N-k }$$

and the F statistic (or F value) is given by:

$$F_{\large s} = \frac{MSS_B}{MSS_W}$$

Finally, \(H_0\) is rejected if \(F_{\large s} > F_c\)

- \(H_0\) is the null hypothesis of equal group means.

- \(k\) is the number of groups.

- \(n_j\) is the number of measurements/replicates in the group \(j\) where \(j=1\ldots k\).

- \(N\) is the total number of measurements, i.e. \(N = \sum\limits_{j=1}^k n_j\)

- \(F_{\large s}\) is the F statistic calculated from the supplied data.

- \(F_c\) is the critical value from the F-Distribution Table at a given alpha level, i.e. \(F_{{\LARGE\nu}_B,{\LARGE\nu}_W,\alpha}\)

- \({\LARGE\nu}_B\) is the degrees of freedom for the between-group, calculated as \(k-1\).

- \({\LARGE\nu}_W\) is the degrees of freedom for the within-group, calculated as \(N-k\).

- \(X_{ij}\) is the \(i^{th}\) measurement of the \(j^{th}\) group.

- \(\overline{X}_j\) is the mean of the measurements in group \(j\).

- \(\overline{X}_T\) is the grand mean of all measurements.
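As a rough illustration of the test described above, the following Python sketch computes \(F_{\large s}\) and the two degrees of freedom, then compares \(F_{\large s}\) with a critical value supplied by the caller. The function name `anova_f_test` and its return keys are hypothetical, and the critical value is assumed to be read from an F-distribution table rather than computed here.

```python
def anova_f_test(groups, f_critical):
    """One-way ANOVA F statistic compared against a supplied F critical value.

    `groups` is a list of lists of measurements, one inner list per group.
    `f_critical` is F_c for (k - 1, N - k) degrees of freedom at the chosen
    alpha level, looked up by the caller (it is not computed here).
    """
    k = len(groups)
    N = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / N
    group_means = [sum(g) / len(g) for g in groups]

    df_between = k - 1   # nu_B
    df_within = N - k    # nu_W

    mss_b = sum(len(g) * (m - grand_mean) ** 2
                for g, m in zip(groups, group_means)) / df_between
    mss_w = sum((x - m) ** 2
                for g, m in zip(groups, group_means) for x in g) / df_within

    f_stat = mss_b / mss_w
    return {"f_stat": f_stat,
            "df_between": df_between,
            "df_within": df_within,
            "reject_h0": f_stat > f_critical}
```

For example, three groups of three replicates give \({\LARGE\nu}_B = 2\) and \({\LARGE\nu}_W = 6\), for which the tabulated critical value at \(\alpha = 0.05\) is approximately 5.14.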

Parameters

Degrees of Freedom

F Statistic (\(F_{\large s}\))

F Critical (\(F_c\))

Using the degrees of freedom and alpha value, the F critical value is read from an F-Distribution Table.

F Distribution

Uncertainty of Calibration Curve (Linear Fit)

Overview

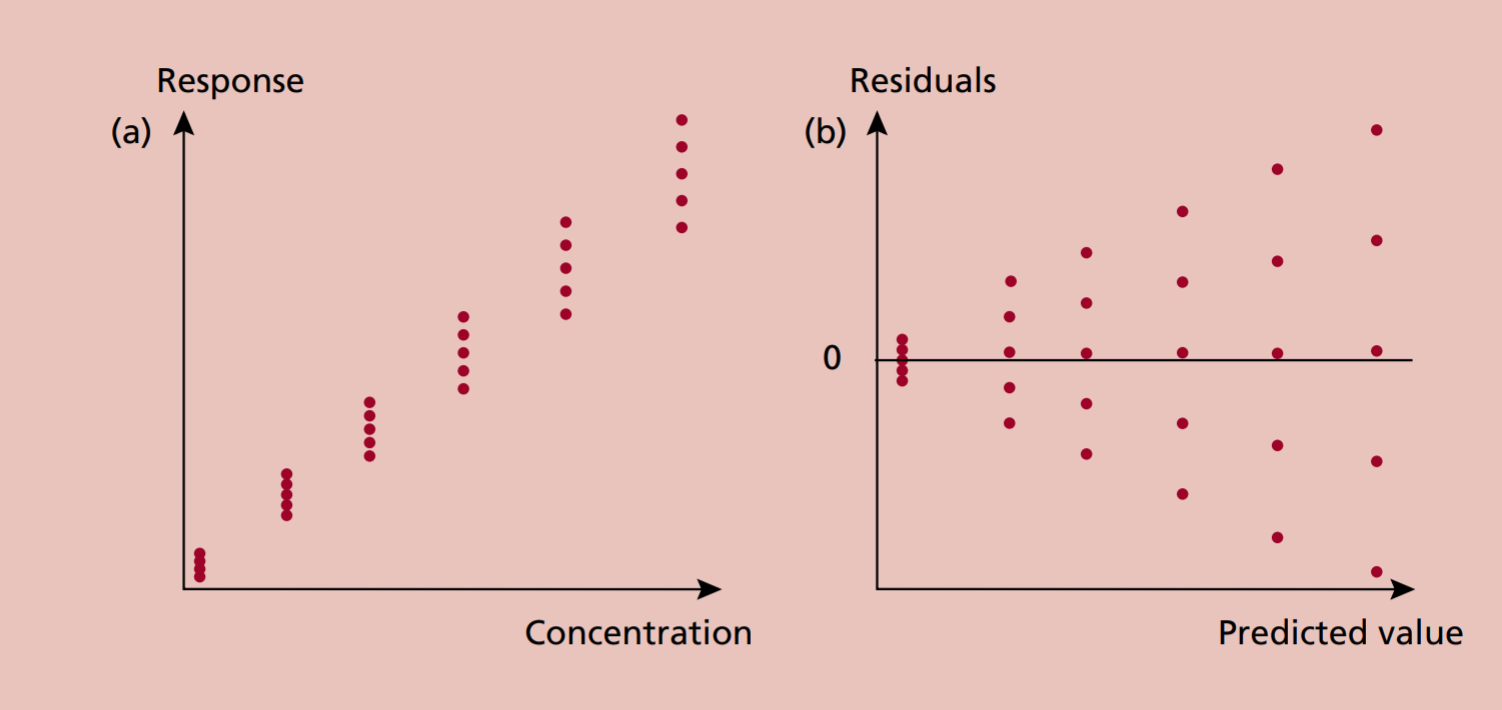

All computations and details of formulas used for computing the uncertainty of the calibration curve are displayed here. A linear fit has been applied on the uploaded data. Optionally, on the start page, you can specify weights if a weighted least squares (WLS) regression is required.

The Method tabs show the main formulas used to compute the linear uncertainty of the calibration curve for both weighted and non-weighted least squares regression.

When using a linear fit, calculations performed in this application use the WLS formula, which is equivalent to a simple linear regression (non-weighted) formula if the weight \(W=1\) is specified.
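The equivalence between WLS with unit weights and simple linear regression can be illustrated with a short Python sketch. It uses the standard closed-form weighted least squares estimates for a straight line; the function name `wls_fit` is hypothetical and the code is not taken from the application.

```python
def wls_fit(x, y, w=None):
    """Weighted least squares fit of y = b0 + b1 * x.

    If `w` is omitted, every weight is 1 and the result is the ordinary
    (non-weighted) least squares line.
    Returns (intercept, slope).
    """
    if w is None:
        w = [1.0] * len(x)

    sum_w = sum(w)
    x_bar_w = sum(wi * xi for wi, xi in zip(w, x)) / sum_w  # weighted mean of x
    y_bar_w = sum(wi * yi for wi, yi in zip(w, y)) / sum_w  # weighted mean of y

    s_xy = sum(wi * (xi - x_bar_w) * (yi - y_bar_w)
               for wi, xi, yi in zip(w, x, y))
    s_xx = sum(wi * (xi - x_bar_w) ** 2 for wi, xi in zip(w, x))

    slope = s_xy / s_xx
    intercept = y_bar_w - slope * x_bar_w
    return intercept, slope


# Unit weights and no weights give the same line.
x = [1.0, 2.0, 3.0, 4.0]
y = [3.1, 4.9, 7.2, 8.8]
unweighted = wls_fit(x, y)
unit_weighted = wls_fit(x, y, w=[1.0, 1.0, 1.0, 1.0])
```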

Where a Quadratic fit is required, please return to the start page and upload your data in the "Quadratic Fit" tab.

Replicates \((r_s)\)

Mean Concentration \((x_s)\)

Step by Step Calculations

Linear Regression

Error Sum of Squares of \(y\)

Uncertainty of Calibration \((u)\)

Relative Standard Uncertainty \((u_r)\)

Uncertainty of Calibration Curve (Quadratic Fit)

Overview

All computations and details of formulas used for computing the uncertainty of the calibration curve are displayed here. A quadratic fit has been applied on the uploaded data.

The Method tab shows the main formulas used to compute the quadratic uncertainty of the calibration curve.

When using a quadratic fit, it is currently not possible to specify weights for the regression model.

Where a linear fit is required, please return to the start page and upload your data in the "Linear Fit" tab.

Background

For a general function \(X=f(x_1,x_2,\ldots,x_n)\), the variance \(Var(X)\) by Taylor's theorem (first-order expansion) is given by:

\(\displaystyle Var(X) = \left(\frac{\partial X}{\partial x_1}\right)^2 Var(x_1) + \left(\frac{\partial X}{\partial x_2}\right)^2 Var(x_2) + \ldots + \left(\frac{\partial X}{\partial x_n}\right)^2 Var(x_n) + \\ \displaystyle \hspace{3em} 2\left(\frac{\partial X}{\partial x_1}\right) \left(\frac{\partial X}{\partial x_2}\right) Cov(x_1,x_2) + 2\left(\frac{\partial X}{\partial x_1}\right) \left(\frac{\partial X}{\partial x_3}\right) Cov(x_1,x_3) + \ldots \)

Using the approach described by D. Brynn Hibbert: The uncertainty of a result from a linear calibration (2006), a quadratic model of the form \(y=b_0 + b_1x + b_2x^2\) can be rewritten as \(y - \overline{y} = b_1(x-\overline{x}) + b_2(x^2 - \overline{x^2})\) to make the curve start from the origin. Doing this removes the covariance dependence of \(b_0\) with \(b_1\) and \(b_2\).

Given an instrument response of case sample peak area ratio \(y_s\), the level of concentration \(x_s\) is estimated by solving for \(x\) as:

\( \displaystyle \hat{x_s} = \frac{ -b_1 \pm \sqrt{ b_1^2-4b_2( \overline{y}-y_s-b_1 \overline{x}-b_2 \overline{x^2}) } } {2b_2} \)

The standard uncertainty \(u\text{(CalCurve)}\) is then obtained by applying Taylor's theorem to the variance of \(\hat{x}_s\).
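As a minimal sketch of the inversion step, the Python function below solves the centred quadratic model for the concentration given a response \(y_s\). The function name `estimate_concentration` and its argument names are hypothetical. The sketch assumes the root with the plus sign in front of the square root is wanted; on that root the slope of the curve equals the square root of the discriminant, so it is the branch on which the response increases with concentration.

```python
import math


def estimate_concentration(y_s, b1, b2, x_bar, x2_bar, y_bar):
    """Estimate x_s from y_s for the model
    y - y_bar = b1 * (x - x_bar) + b2 * (x^2 - x2_bar),
    where x2_bar is the mean of the squared calibration concentrations.
    """
    if b2 == 0:
        raise ValueError("b2 is zero: the model is linear, not quadratic")

    # Constant term of b2*x^2 + b1*x + c = 0
    c = y_bar - y_s - b1 * x_bar - b2 * x2_bar
    discriminant = b1 ** 2 - 4 * b2 * c
    if discriminant < 0:
        raise ValueError("no real solution for this response")

    # Assumption: take the '+' root (rising branch of the curve)
    return (-b1 + math.sqrt(discriminant)) / (2 * b2)
```

For calibration levels 1 to 5 and a curve \(y = 0.5 + 2x + 0.1x^2\), the means are \(\overline{x} = 3\), \(\overline{x^2} = 11\) and \(\overline{y} = 7.6\); a response of 6.125 then maps back to a concentration of 2.5.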

Method

Using the approach described in D. Brynn Hibbert: The uncertainty of a result from a linear calibration (2006) the uncertainty of the quadratic curve is given by:

\(\displaystyle u\text{(CalCurve)}^2 = \left(\frac{\partial \hat{x_s}}{\partial b_1}\right)^2 Var(b_1) + \left(\frac{\partial \hat{x_s}}{\partial b_2}\right)^2 Var(b_2) + \left(\frac{\partial \hat{x_s}}{\partial \overline{y}}\right)^2 Var(\overline{y}) + \\ \displaystyle \hspace{3em}\left(\frac{\partial \hat{x_s}}{\partial y_s}\right)^2 Var(y_s) + 2\left(\frac{\partial \hat{x_s}}{\partial b_1}\right) \left(\frac{\partial \hat{x_s}}{\partial b_2}\right) Cov(b_1,b_2)\)

Note: As described in the Background tab, this formula removes the covariance dependency of \(b_0\) with the other parameters \(b_1\) and \(b_2\) by making the model start from the origin [Pg. 4/5 - D. Brynn Hibbert (2006)].

The Relative Standard Uncertainty is then given by:

\(\displaystyle u_r\text{(CalCurve)} = \frac{u\text{(CalCurve)}}{x_s}\)

The partial derivatives are obtained by differentiating the equation below with respect to \(b_1, b_2, \overline{y}, y_s\)

\(\displaystyle\hat{x_s} = \frac{-b_1 \pm \sqrt{b_1^2-4b_2(\overline{y}-y_s-b_1\overline{x}-b_2\overline{x^2})}}{2b_2}\)

Note: It is assumed that the regression parameters are independent and that \(Var(\overline{x}) = 0\) and \(Var(\overline{x^2}) = 0\).

The Covariance Matrix for \(Var(b_1)\), \(Var(b_2)\) and \(Cov(b_1,b_2)\) can be estimated as:

\(\sigma^2(\underline{X}^T\underline{X})^{-1} = \begin{Bmatrix} Var(b_0) & Cov(b_0,b_1) & Cov(b_0,b_2) \\ Cov(b_0,b_1) & Var(b_1) & Cov(b_1,b_2) \\ Cov(b_0,b_2) & Cov(b_1,b_2) & Var(b_2) \\ \end{Bmatrix}\)

where \(\sigma^2\) is the variance of \(y\) estimated by the Standard Error of Regression squared \(S_{y/x}^2\):

\(\displaystyle S_{y/x} = \sqrt{\frac{\sum\limits_{i=1}^n (y_i-\hat{y}_i)^2}{n-3}}\)

- \(x_i\) is the concentration at level \(i\).

- \(x_s\) is the mean concentration of the Case Sample.

- \(r_s\) is the number of replicates made on the test sample to determine \(x_s\).

- \(y_i\) is the observed peak area ratio for a given concentration \(x_i\).

- \(\hat{y}_i\) is the predicted value of \(y\) for a given value \(x_i\).

- \(b_1\) is the Slope of the regression line.

- \(y_s\) is the mean of Peak Area Ratio of the Case Sample.

- \(n\) is the number of measurements used to generate the Calibration Curve.

- \(\overline{x}\) is the mean of the values of the different calibration standards.

- \(Var(\overline{y})\) is the variance of the mean of \(y\), given as: \(\frac{S_{y/x}^2}{n}\).

- \(Var(y_s)\) is the variance of the mean of Case Sample Peak Area Ratios \((y_s)\), given as: \(\frac{S_{y/x}^2}{r_s}\)

Replicates \((r_s)\)

Mean Concentration \((x_s)\)

Mean Peak Area Ratio \((y_s)\)

Calculations

Quadratic Regression

Standard Error of Regression \((S_{y/x})\)

Variance of \(y_s\)

Variance of \(\overline{y}\)

Deriving Partial Derivatives

The partial derivatives are obtained by differentiating the equation below with respect to \(b_1, b_2, \overline{y}, y_s\)

\(\displaystyle\hat{x_s} = \frac{-b_1 \pm \sqrt{b_1^2-4b_2(\overline{y}-y_s-b_1\overline{x}-b_2\overline{x^2})}}{2b_2}\)

For simplicity let the discriminant \((D)\) be equal to:

\(\displaystyle D = b_1^2-4b_2(\overline{y}-y_s-b_1\overline{x}-b_2\overline{x^2})\)

Deriving Covariance Matrix

The covariance matrix \(S_{y/x}^2(\underline{X}^T\underline{X})^{-1}\) is derived by taking a transpose of the design matrix and then multiplying it by the design matrix, after which an inverse is taken and multiplied by the variance \(S_{y/x}^2\).
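The sequence of matrix steps described above (design matrix, transpose, multiply, inverse, scale by \(S_{y/x}^2\)) can be sketched in plain Python. The helper names below (`transpose`, `matmul`, `inverse`, `covariance_matrix`) are hypothetical and the Gauss-Jordan inversion is just one straightforward way to invert a small matrix; the application itself may do this differently.

```python
def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def inverse(m):
    """Invert a square matrix by Gauss-Jordan elimination with pivoting."""
    n = len(m)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * c for v, c in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def covariance_matrix(x, s_yx):
    """Return S_yx^2 * (X^T X)^-1 for the quadratic model.

    Rows and columns are ordered b0, b1, b2, so Var(b1) is entry [1][1],
    Var(b2) is entry [2][2] and Cov(b1, b2) is entry [1][2].
    """
    design = [[1.0, xi, xi ** 2] for xi in x]        # design matrix X
    xtx = matmul(transpose(design), design)           # X^T X
    xtx_inv = inverse(xtx)                            # (X^T X)^-1
    return [[s_yx ** 2 * v for v in row] for row in xtx_inv]
```

Because the design matrix has three columns (intercept, \(x\), \(x^2\)), at least three distinct concentration levels are needed for \(\underline{X}^T\underline{X}\) to be invertible.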

Design Matrix

Design Matrix Transposed

Multiply

Inverse

Covariance Matrix

Uncertainty of Calibration \((u)\)

Relative Standard Uncertainty \((u_r)\)

Uncertainty of Method Precision

Overview

A step-by-step approach for estimating the uncertainty of method precision is outlined here. The main methodology used is the pooled standard deviation approach.

Where a precision experiment is carried out for different nominal values of concentration (e.g. low, medium and high), the uncertainty of method precision is calculated for each nominal value separately and the uncertainty used for the combined uncertainty is the value for which the specified case sample concentration is closest to the nominal value.

To derive the relative standard uncertainty, the standard uncertainty is divided by the number of case sample replicates as recommended by Kadis (2017).

Method

- \(S\) is the standard deviation for each run.

- \({\large\nu}\) is the individual degrees of freedom.

- \(S_p\) is the pooled standard deviation.

- \(NV\) is the nominal value of concentration.

- \(\overline{x}_{\text{(NV)}}\) is the mean concentration for the nominal value \(\text{NV}\).
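The pooled standard deviation step can be illustrated with a small Python sketch. It uses the standard pooled formula, in which the sum of \(S^2 \times {\large\nu}\) over the runs is divided by the sum of the degrees of freedom before taking the square root. The function name `pooled_standard_deviation` is hypothetical, and the sketch covers a single nominal value only.

```python
import math


def pooled_standard_deviation(std_devs, dofs):
    """Pooled standard deviation S_p for one nominal value.

    `std_devs` holds the standard deviation S of each run and `dofs`
    the matching degrees of freedom nu (typically run size minus one).
    """
    if len(std_devs) != len(dofs):
        raise ValueError("std_devs and dofs must have the same length")

    weighted_sum = sum(s ** 2 * v for s, v in zip(std_devs, dofs))  # sum of S^2 * nu
    total_dof = sum(dofs)                                           # sum of nu
    return math.sqrt(weighted_sum / total_dof)
```

When every run has the same standard deviation, the pooled value equals that common standard deviation regardless of the degrees of freedom.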

Replicates \((r_s)\)

Mean Concentration \((x_s)\)

Step by Step Calculation

Sum of Degrees of Freedom \(({\large\nu}_\text{(NV)})\)

Mean Concentration of \(\text{NV}\) \((\overline{x}_\text{(NV)})\)

Sum of \((S^2 \times {\large\nu})_\text{(NV)}\)

Pooled Standard Deviation \((S_p)\)

Standard Uncertainty \((u)\)

Relative Standard Uncertainty \((u_r)\)

Uncertainty of Method Precision

Uncertainty of Calibration Standard

Overview

Information provided on the structure of solution preparation and details of the equipment used is displayed here, along with a step-by-step calculation of the uncertainty associated with spiking the calibration standards.

The solution structure is displayed using a network or tree diagram, with the root assumed to be the reference compound and the final nodes assumed to be the spiking range for calibrators used in generating the calibration curve.

If more than one spiking range exists (which may be due to splitting the range of the calibration curve), the uncertainty associated with the calibration standard is computed by pooling the relative standard uncertainties associated with preparing solutions and spiking the calibration curve.

Method

- \(\text{Parent Solution}\) is the solution from which a given solution is made.

- \(N\text{(Equipment)}\) is the number of times a piece of equipment is used in the preparation of a given solution.

Standard Uncertainty \((u)\)

Relative Standard Uncertainty \((u_r)\)

Relative Standard Uncertainty of Solutions

Overall Relative Standard Uncertainty of Calibration Standard

Uncertainty of Sample Preparation

Overview

Uncertainty of sample preparation combines uncertainty sources from the use of different equipment in preparing the sample, such as weighing balance, pipette and volumetric flask.

Method

- \(N\text{(Equipment)}\) is the number of times a piece of equipment is used in the preparation of a given sample.

Standard Uncertainty

Relative Standard Uncertainty

Overall Relative Standard Uncertainty

Combined Uncertainty

Overview

The combined uncertainty is obtained by combining all the individual uncertainty components.

If data is uploaded for all the uncertainty components (Homogeneity, Calibration Curve, Method Precision, Calibration Standard and Sample Preparation), a relative standard uncertainty is computed for each component and these are combined to obtain the Combined Uncertainty of the analytical process.

If data is omitted for some uncertainty components, NA will be displayed for those components and the Combined Uncertainty will only take into account components for which data is provided.

Method

- Where \(x_s\) is the Case Sample Mean Concentration.
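The combination step can be sketched in Python as follows. The combining formula is not reproduced in this text, so the sketch assumes the usual root-sum-of-squares of the relative standard uncertainties scaled by \(x_s\), which is consistent with the Coverage Factor method where \(\text{CombUncertainty}/x_s\) is treated as a relative quantity. The function name `combined_uncertainty` and the use of `None` for components without data are hypothetical.

```python
import math


def combined_uncertainty(x_s, relative_uncertainties):
    """Combine relative standard uncertainties into a combined uncertainty.

    `relative_uncertainties` maps component names to u_r values; a value
    of None marks a component with no uploaded data and is skipped.
    Assumption: components are combined by root-sum-of-squares and
    scaled by the case sample mean concentration x_s.
    """
    used = [u for u in relative_uncertainties.values() if u is not None]
    return x_s * math.sqrt(sum(u ** 2 for u in used))


# Example with two components supplied and three omitted.
components = {
    "Homogeneity": 0.03,
    "CalCurve": 0.04,
    "MethodPrec": None,
    "CalStandard": None,
    "SamplePreparation": None,
}
comb = combined_uncertainty(10.0, components)
```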

\(u_r\text{(Homogeneity)}\)

\(u_r\text{(CalCurve)}\)

\(u_r\text{(MethodPrec)}\)

\(u_r\text{(CalStandard)}\)

\(u_r\text{(SamplePreparation)}\)

Mean Concentration \((x_s)\)

Combined Uncertainty

Uncertainty Budget

Coverage Factor

Overview

Coverage factor \((k)\) is a number usually greater than one from which an expanded uncertainty is obtained when \(k\) is multiplied by a combined standard uncertainty. To determine a suitable coverage factor, a specified level of confidence is required along with knowledge about the degrees of freedom of all uncertainty components.

The effective degrees of freedom are computed using the Welch-Satterthwaite equation (JCGM 100:2008) with details given in the Method tab. The derived effective degrees of freedom along with the specified \({\small CI\%}\) are used to read a value (termed coverage factor) from the T-Distribution Table.

Method

The effective degrees of freedom \(({\LARGE\nu}_{\text{eff}})\) using the Welch-Satterthwaite approximation for relative standard uncertainty is given by:

$${\LARGE\nu}_{\text{eff}} =\frac{(\frac{\text{CombUncertainty}}{x_s})^4}{\sum{\frac{u_r\text{(Individual Uncertainty Component)}^4}{{\LARGE\nu}_{\text{(Individual Uncertainty Component)}}}}}$$

The coverage factor \((k_{{\large\nu}_{\text{eff}}, {\small CI\%}})\) is read from the T-Distribution Table using the calculated \({\Large\nu}_{\text{eff}}\) and specified \({\small CI\%}\).

- \(x_s\) is the Case Sample Mean Concentration.

- \(\nu\) is the Degrees of Freedom for each uncertainty component.

- \({\small\text{CombUncertainty}}\) is the Combined Uncertainty of the individual uncertainty components.
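A minimal Python sketch of the Welch-Satterthwaite calculation above is given here. The function name `effective_degrees_of_freedom` and the list-of-pairs input format are hypothetical; reading the coverage factor from the t-distribution table is not included.

```python
def effective_degrees_of_freedom(comb_uncertainty, x_s, components):
    """Welch-Satterthwaite effective degrees of freedom.

    `components` is a list of (u_r, nu) pairs: the relative standard
    uncertainty and the degrees of freedom of each uncertainty component
    that contributed to `comb_uncertainty`.
    """
    numerator = (comb_uncertainty / x_s) ** 4
    denominator = sum(u_r ** 4 / nu for u_r, nu in components)
    return numerator / denominator


# Two components with relative uncertainties 0.03 and 0.04,
# combined uncertainty 0.5 at a mean concentration of 10.
nu_eff = effective_degrees_of_freedom(0.5, 10.0, [(0.03, 5), (0.04, 10)])
```

With a single component the result reduces to that component's own degrees of freedom, which is a quick sanity check on any implementation.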

\(u_r\text{(Homogeneity)}\)

\(u_r\text{(CalCurve)}\)

\(u_r\text{(MethodPrec)}\)

\(u_r\text{(CalStandard)}\)

\(u_r\text{(SamplePreparation)}\)

\(\text{CombUncertainty}\)

Mean Concentration \((x_s)\)

Degrees of Freedom of Homogeneity

Degrees of Freedom of Calibration Curve

Degrees of Freedom of Method Precision

Degrees of Freedom of Calibration Standard

Degrees of Freedom of Sample Preparation

Effective Degrees of Freedom

T-Distribution Table

Coverage Factor

Expanded Uncertainty

Overview

The expanded uncertainty is the final step of measurement uncertainty computation. This is done in order to derive a confidence interval believed to contain the true unknown value.

It is computed by multiplying the Combined Uncertainty \((\text{CombUncertainty})\) with the Coverage Factor \((k_{{\large\nu}_{\text{eff}}, {\small CL\%}})\).

Method

The expanded uncertainty \(\text{(ExpUncertainty)}\) is given by:

$$\text{ExpUncertainty} = \text{CoverageFactor} \times \text{CombUncertainty}$$

Where the \(\text{CoverageFactor}\) is \(k\) when a value has been manually specified or \(k_{{\large\nu}_{\text{eff}}, {\small CL\%}}\) when a Confidence Level \(CL\%\) has been specified.

The percentage expanded uncertainty can then be given by:

$$\text{\%ExpUncertainty} = \frac{\text{ExpUncertainty}}{x_s} \times 100$$

where:

- \(k_{{\large\nu}_{\text{eff}}, {\small CL\%}}\) is the coverage factor based on effective degrees of freedom and specified confidence level percentage.

- \({\small\text{CombUncertainty}}\) is the combined uncertainty.

- \(x_s\) is the case sample mean concentration.
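The two formulas above, together with the rule that a manually specified coverage factor takes precedence, can be sketched in Python. The function name `expanded_uncertainty` and its parameter names are hypothetical; the coverage factor derived from the confidence level is assumed to have been read from the t-distribution table beforehand.

```python
def expanded_uncertainty(comb_uncertainty, x_s, k_from_confidence, k_manual=None):
    """Return (ExpUncertainty, %ExpUncertainty).

    `k_from_confidence` is the coverage factor read from the t-distribution
    table for the effective degrees of freedom and confidence level.
    `k_manual`, when given, overrides it.
    """
    coverage_factor = k_manual if k_manual is not None else k_from_confidence
    exp_u = coverage_factor * comb_uncertainty
    percent_exp_u = exp_u / x_s * 100
    return exp_u, percent_exp_u


# Coverage factor taken from the confidence level, then overridden manually.
from_table = expanded_uncertainty(0.5, 10.0, k_from_confidence=2.0)
overridden = expanded_uncertainty(0.5, 10.0, k_from_confidence=2.0, k_manual=3.0)
```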

\(\text{CombUncertainty}\)

Mean Concentration \((x_s)\)

Expanded Uncertainty

Percentage Expanded Uncertainty

Replicates \((r_s)\)

Mean Concentration \((x_s)\)

Uncertainty of Homogeneity

Homogeneity Test Result

Uncertainty of Calibration Curve

Uncertainty of Method Precision

Uncertainty of Calibration Standard

Uncertainty of Sample Preparation

Combined Uncertainty

Coverage Factor

Expanded Uncertainty

% Expanded Uncertainty

Results

Download Report

For reporting purposes, a report can be downloaded and stored for all the results uploaded.

Download Report